Explainable Deep Learning for Interstitial Alloys

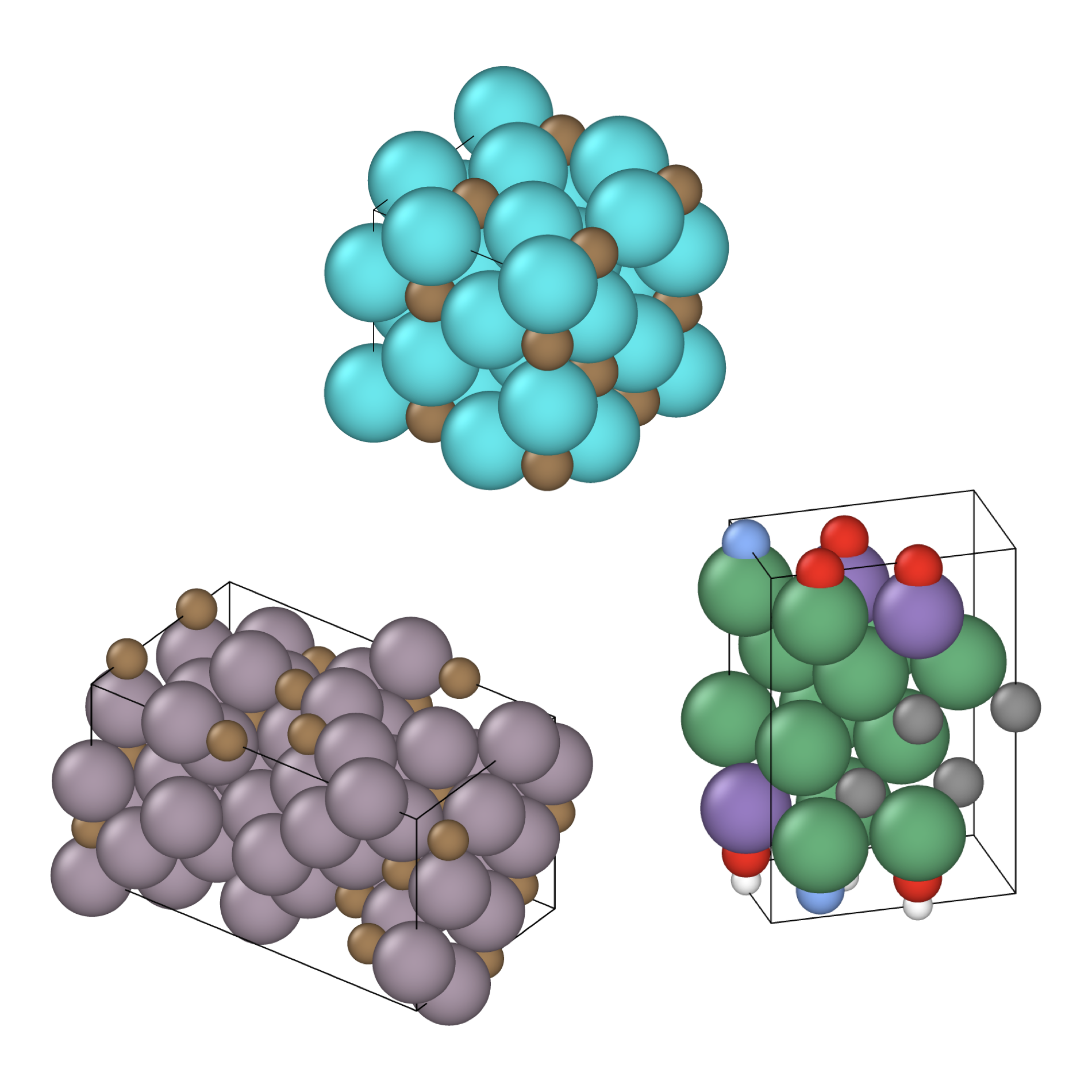

Unlike stoichiometric materials, interstitial-type alloys are characterized by small atoms positioned pseudo-randomly across the crystal lattice of larger atoms, in spaces known as interstices. Stoichiometric materials have been modelled by assuming that each atom type occupies fixed, allowed sites within the lattice, but this assumption does not hold for interstitial materials. This makes it difficult to develop explainability algorithms that capture the complex relationships between the ordering of interstitial atoms and the properties of the material, and addressing that challenge is the focus of this research.